|

This is a C++ implementation of the max-margin supervised topic models, including the variational algorithms presented in [1][2] and two Monte Carlo methods presented in [3].

We also provide a C++ implementation of the supervised LDA regression model presented in [5].

Downloads

- Download the readme.txt
- Download the source code of the variational algorithms presented in [1][2]: 1) Windows: MedLDAc.zip; and 2) Linux: medlda.zip
- Download the source code of the collapsed Gibbs sampling and the importance sampling algorithms presented in [3]: 1) collapsed Gibbs sampling: MedLDA_Gibbs.zip; and 2) importance sampling: MedLDA_IS.zip
- Download the MedLDA regression model: MedLDA_Regression
- Download the supervised LDA regression model: sLDA_Regression

Update News

- Two Monte Carlo methods, based on the NIPS paper [3], were published on Nov 18, 2012.
- The implementations of the MedLDA regression model and the supervised LDA regression model were published on Dec 02, 2011.
- A bug in data loading and the "est" option was fixed on Dec 22nd, 2010. Thanks to Simon Lacoste-Julien and Michalis Raptis for reporting.
- The Linux version of the MedLDA classification model (version 1.0) was released on Aug 7th, 2010.
- The MedLDA classification model (version 1.0) was released on Jul 8th, 2010.

Short Description

Supervised topic models use documents' side information to discover predictive low-dimensional representations of documents. Existing models apply likelihood-based estimation. In this project, we present a general framework of max-margin supervised topic models for both continuous and categorical response variables. Our approach, the maximum entropy discrimination latent Dirichlet allocation (MedLDA), uses the max-margin principle to train supervised topic models and estimate predictive topic representations that are arguably more suitable for prediction tasks. The general principle of MedLDA can be applied to perform joint max-margin learning and maximum likelihood estimation for arbitrary topic models, directed or undirected, and supervised or unsupervised, when supervised side information is available. We develop efficient variational methods for posterior inference and parameter estimation, and demonstrate qualitatively and quantitatively the advantages of MedLDA over likelihood-based topic models on movie review, hotel review, and 20 Newsgroups data sets.
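To make the max-margin principle concrete, the following is a minimal C++ sketch that is not taken from the MedLDA source code. It assumes a document is summarized by a vector of topic proportions and that each class has a weight vector over topics; the names `classScore`, `predictLabel`, and `hingeLoss` are hypothetical. It computes a multi-class hinge loss with a fixed margin of 1, which is a simplified point-estimate stand-in for the expected margin constraints that MedLDA places on the latent topic representation.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical helper (not part of the released code): score of one class,
// computed as the inner product of its weights with the topic proportions.
double classScore(const std::vector<double>& weights,
                  const std::vector<double>& topicProportions) {
    assert(weights.size() == topicProportions.size());
    double score = 0.0;
    for (std::size_t k = 0; k < topicProportions.size(); ++k) {
        score += weights[k] * topicProportions[k];
    }
    return score;
}

// Predict the class whose weight vector gives the highest score.
std::size_t predictLabel(const std::vector<std::vector<double>>& classWeights,
                         const std::vector<double>& topicProportions) {
    assert(!classWeights.empty());
    std::size_t best = 0;
    double bestScore = classScore(classWeights[0], topicProportions);
    for (std::size_t y = 1; y < classWeights.size(); ++y) {
        const double s = classScore(classWeights[y], topicProportions);
        if (s > bestScore) {
            bestScore = s;
            best = y;
        }
    }
    return best;
}

// Multi-class hinge loss with margin 1 (an assumed cost):
// max(0, max over y != trueLabel of (1 + score_y - score_true)).
double hingeLoss(const std::vector<std::vector<double>>& classWeights,
                 const std::vector<double>& topicProportions,
                 std::size_t trueLabel) {
    assert(trueLabel < classWeights.size());
    const double trueScore =
        classScore(classWeights[trueLabel], topicProportions);
    double loss = 0.0;
    for (std::size_t y = 0; y < classWeights.size(); ++y) {
        if (y == trueLabel) continue;
        const double violation =
            1.0 + classScore(classWeights[y], topicProportions) - trueScore;
        loss = std::max(loss, violation);
    }
    return loss;
}
```

With class weights `{{1, 0}, {0, 1}}` and topic proportions `{0.9, 0.1}`, `predictLabel` picks class 0, and the loss for true label 0 is 1 + 0.1 - 0.9 = 0.2: the prediction is correct, but the margin is not yet satisfied, so a max-margin learner would still push the representation and the weights further apart.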

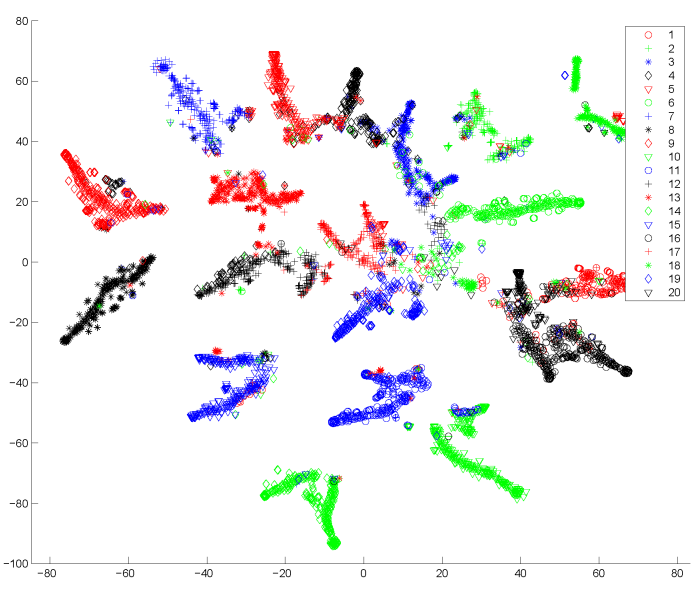

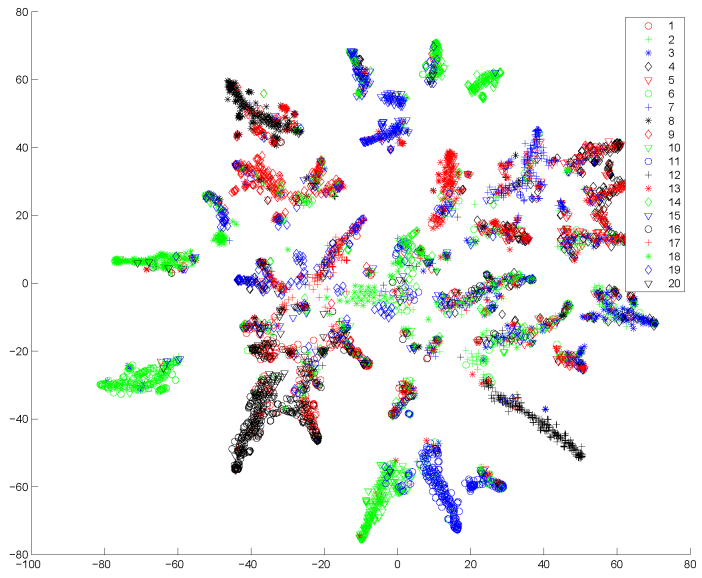

The following figures show 2D embeddings of the latent representations discovered by MedLDA and by standard unsupervised LDA on the 20 Newsgroups data set. The embeddings are computed with the t-SNE (t-Distributed Stochastic Neighbor Embedding) method.

Panels: MedLDA, LDA

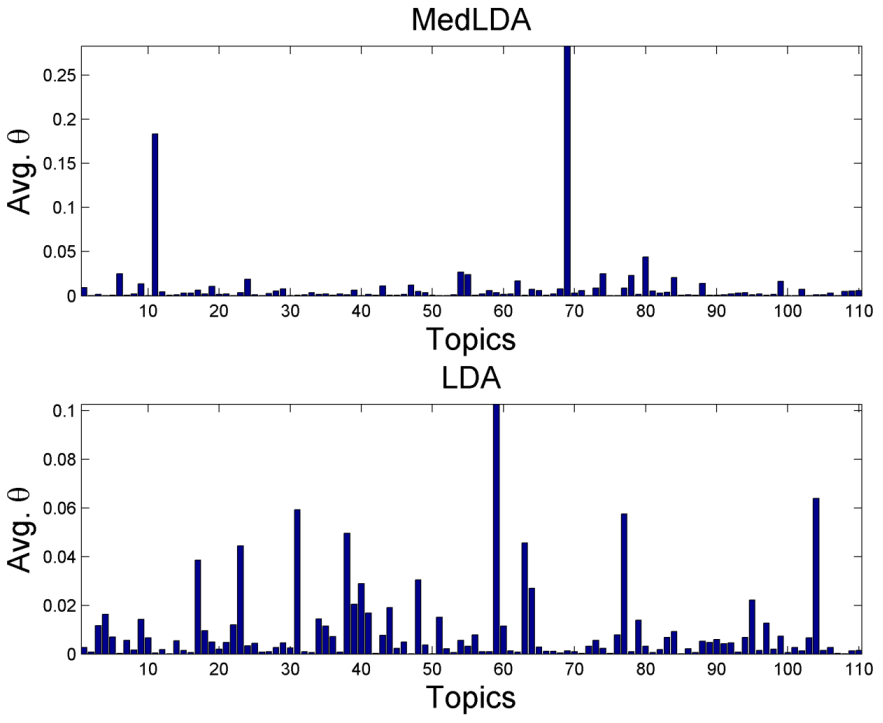

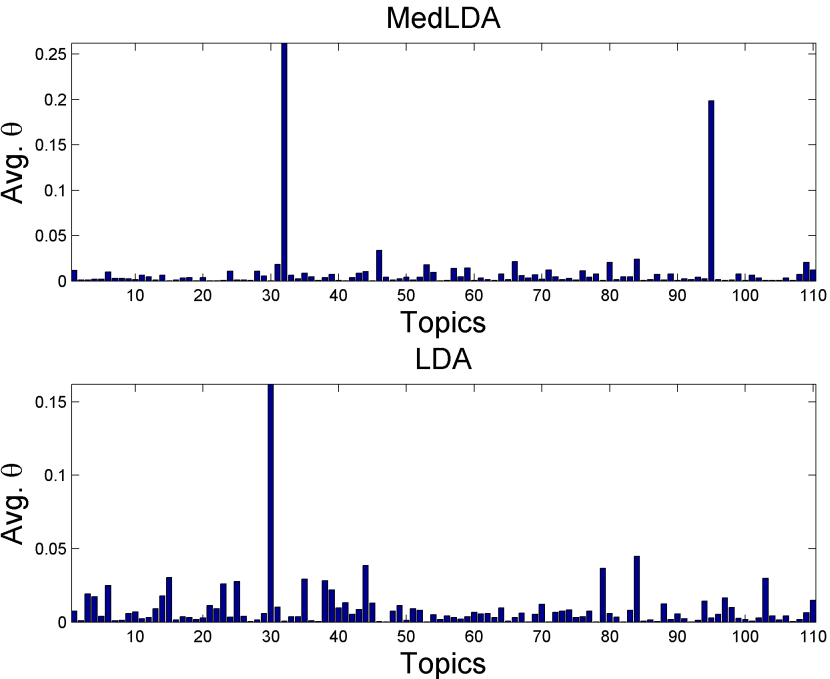

The following figures show the average latent representations for documents in the "comp.graphics" and "sci.electronics" categories, respectively. The latent representations discovered by MedLDA are much sparser and more discriminative than those discovered by unsupervised LDA. More examples are provided in the paper.

Panels: comp.graphics, sci.electronics
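Sparsity of a latent representation can be quantified in several ways. One common choice, assumed here purely for illustration, is the Shannon entropy of a topic-proportion vector: lower entropy means the mass is concentrated on fewer topics. The sketch below is not part of the released code, and `topicEntropy` is a hypothetical name.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

// Hypothetical helper: Shannon entropy (in nats) of a topic-proportion
// vector. Entries are assumed to be non-negative and to sum to 1.
// Zero entries contribute nothing, following the convention 0 * log(0) = 0.
double topicEntropy(const std::vector<double>& topicProportions) {
    double entropy = 0.0;
    for (double p : topicProportions) {
        assert(p >= 0.0);
        if (p > 0.0) {
            entropy -= p * std::log(p);
        }
    }
    return entropy;
}
```

A representation that puts all its mass on one topic has entropy 0, while a uniform representation over K topics has the maximum entropy log(K), so averaging this value over the documents of a category gives one number to compare two models.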

References

1. Jun Zhu, Amr Ahmed, and Eric P. Xing. MedLDA: Maximum Margin Supervised Topic Models. Journal of Machine Learning Research, 13(Aug):2237-2278, 2012.
2. Jun Zhu, Amr Ahmed, and Eric P. Xing. MedLDA: Maximum Margin Supervised Topic Models for Regression and Classification. In ICML, Montreal, Canada, 2009.
3. Qixia Jiang, Jun Zhu, Maosong Sun, and Eric P. Xing. Monte Carlo Methods for Maximum Margin Supervised Topic Models. In NIPS, Lake Tahoe, USA, 2012.
4. Jun Zhu and Eric P. Xing. Conditional Topic Random Fields. In ICML, Haifa, Israel, 2010.
5. David Blei and Jon D. McAuliffe. Supervised Topic Models. In NIPS, Vancouver, Canada, 2007.

|